The Google core algorithm is the Holy Grail for digital marketers and SEOs. This shrouded, maniacally complicated, and ever-changing set of algorithms quickly left most rival search engines behind, generated billions of dollars, and has kept SEOs and webmasters scratching their heads since the late nineties.

This set of algorithms has elevated some web content to viral success and penalized other content into oblivion. Where did this all-powerful algorithm come from, and how can an online business or content creator appease it?

The Primordial Soup of Search: Before Google

Before Google’s game-changing algorithm, search engines began as directories and archives of information and grew more intelligent over time. Yahoo! Directory, the Open Directory Project, WebCrawler, AltaVista, and others offered only superficial search. Until WebCrawler began indexing the full text of pages in 1994, directories indexed only web page titles and ignored the content within. Some ranked pages simply by counting how often a query’s keywords appeared, which rewarded spammy, keyword-stuffed titles.
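To see why counting keywords rewarded spam, here is a minimal Python sketch of a naive title ranker. It is a hypothetical illustration, not the code of any real search engine: the function names `keyword_count_score` and `rank_by_keyword_count` and the sample titles are assumptions made for this example. The score is simply the number of words in a title that match the query, so a title that repeats the query over and over beats a genuinely useful one.

```python
def keyword_count_score(title: str, query: str) -> int:
    """Hypothetical naive score: how many words in the title match a query term."""
    words = title.lower().split()
    terms = set(query.lower().split())
    return sum(1 for w in words if w in terms)


def rank_by_keyword_count(titles: list[str], query: str) -> list[str]:
    """Sort titles by raw keyword count, highest first (ties keep input order)."""
    return sorted(titles, key=lambda t: keyword_count_score(t, query), reverse=True)


# Hypothetical titles: one useful, one keyword-stuffed.
titles = [
    "A practical guide to cheap flights",
    "cheap flights cheap flights cheap flights cheap flights",
]
print(rank_by_keyword_count(titles, "cheap flights"))
```

Running it puts the keyword-stuffed title first, which is the kind of weakness that link-based ranking later helped counter.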

During the mania of the “dot-com bubble,” the internet exploded in popularity and profitability. Widespread use of computers and the internet meant boom times for investors and businesses looking for an exciting new revenue stream. As you might imagine, a newly commercialized internet meant a glut of spam. In those lawless years, bad content reigned.

The Rise of Relevancy

Like any revolutionary idea, the Google algorithm borrowed ideas from existing systems. Sorting articles and information based on relevancy had been developing in scholarly circles since the 17th century, and had developed into bibliometrics, a mathematical analysis of documents. While

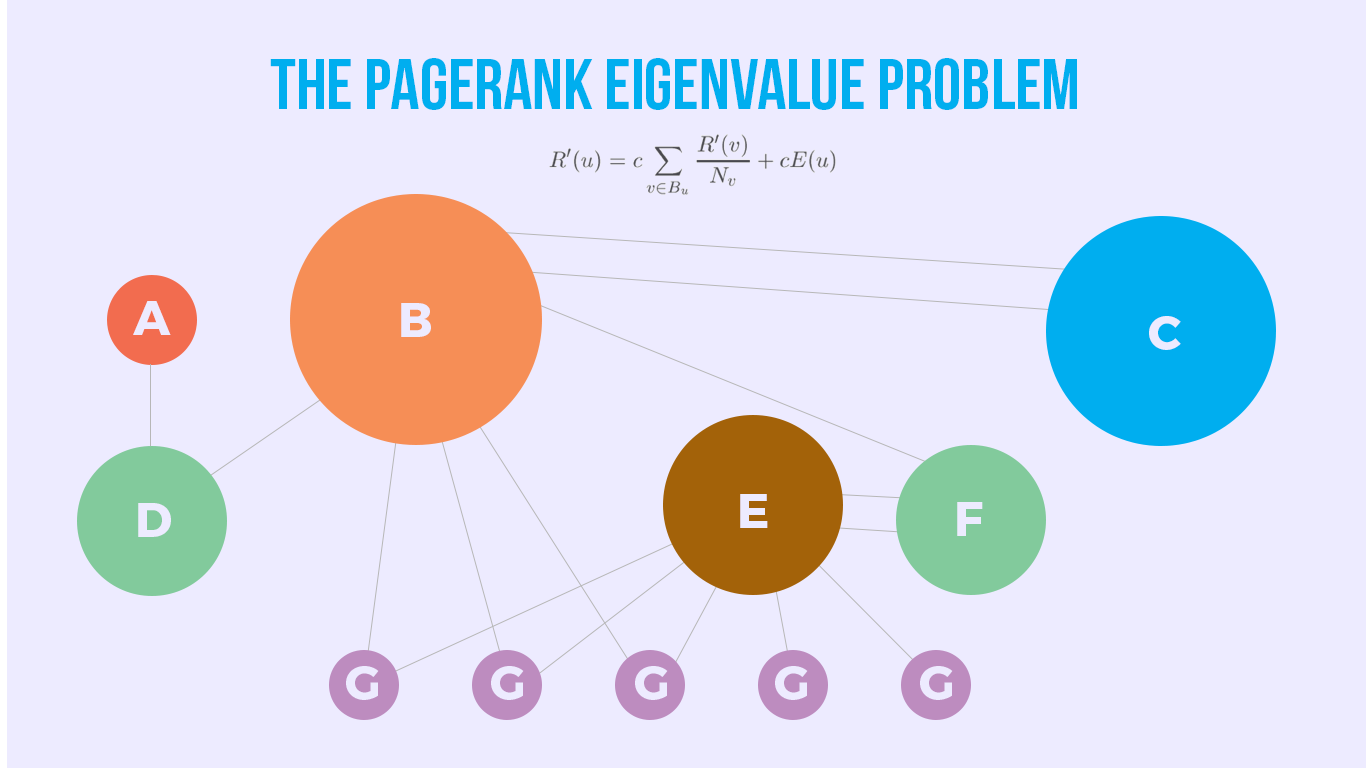

enrolled in the computer science PhD program at Stanford, Google co-founder Larry Page developed a link analysis algorithm, which borrowed from the academic citation model, and applied numerical variables for “weight” and “damping” factors, which produced a nuanced system for ranking links. To us laymen without computer science PhDs, this may sound like gibberish. In simple terms, PageRank considers the popularity, quality, and authority of links in context to calculate the ranking for relevance of search results for a user’s query.
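For the technically curious, the core idea can be sketched in a few lines of Python. This is a minimal textbook version of PageRank computed by power iteration, using the common normalized form in which all scores sum to 1; it is not Google’s production system. The function name `pagerank`, the toy four-page link graph, and the convergence settings (`tol`, `max_iter`) are assumptions for illustration. The damping factor of 0.85 is the value suggested in the original PageRank paper: with probability 0.85 a “random surfer” follows a link on the current page, and otherwise jumps to a random page. Each page splits its score evenly among the pages it links to, so a link from a high-scoring page is worth more than a link from an obscure one. Repeating the update until the scores stop changing converges to the principal eigenvector of the link matrix, which is why PageRank is often described as an eigenvector algorithm.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             tol: float = 1e-10, max_iter: int = 200) -> dict[str, float]:
    """Textbook PageRank via power iteration (a sketch, not Google's system).

    `links` maps each page to the pages it links to (a hypothetical toy graph).
    """
    # Collect every page that appears as a source or as a link target.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution

    for _ in range(max_iter):
        # Teleport term: with probability 1 - damping, jump to any page at random.
        new = {p: (1.0 - damping) / n for p in pages}

        # Pages with no outbound links ("dangling" pages) spread their score evenly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        for p in pages:
            new[p] += damping * dangling / n

        # Each page splits its damped score evenly among the pages it links to.
        for src, targets in links.items():
            if targets:
                share = damping * rank[src] / len(targets)
                for t in targets:
                    new[t] += share

        # Stop once the scores have effectively stopped changing.
        delta = sum(abs(new[p] - rank[p]) for p in pages)
        rank = new
        if delta < tol:
            break
    return rank


# Hypothetical toy web: C is linked to by A, B, and D; nothing links to D.
links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(links)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph, C comes out on top because three pages link to it, and A ranks second despite having a single inbound link, because that link comes from C. D, which nothing links to, keeps only the small teleport share.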

Even in its beta stage, Google’s search engine was hailed for its simple design, speed, and (most importantly) meaningful search results. Instead of acting as a simple keyword counter, Google analyzed content and links in depth and delivered a more satisfying way to surf the internet. At the heart of Google’s resounding success was that groundbreaking eigenvector-based algorithm.

Algorithm Updates: Caffeine, Panda, Penguin, Pigeon, and RankBrain

As computer technology becomes more intelligent, so, too, must search engines. Although Google quickly became the world’s favorite search engine, its PageRank-based ranking needed updates to keep advancing. A new industry emerged, in which SEOs (search engine optimizers) tailored web content and structure to appeal to Google’s algorithm. These SEOs saw rankings change dramatically in the early 2000s. The core Google algorithm was evolving.

Google’s “core” algorithm is really a conglomerate of shifting algorithms. Most updates are so subtle that not even veteran SEOs can identify them; others send shockwaves throughout the internet. In the early 2000s, Google set out to update its algorithm monthly, but it soon abandoned that schedule. Today, the algorithm changes more than once a day.

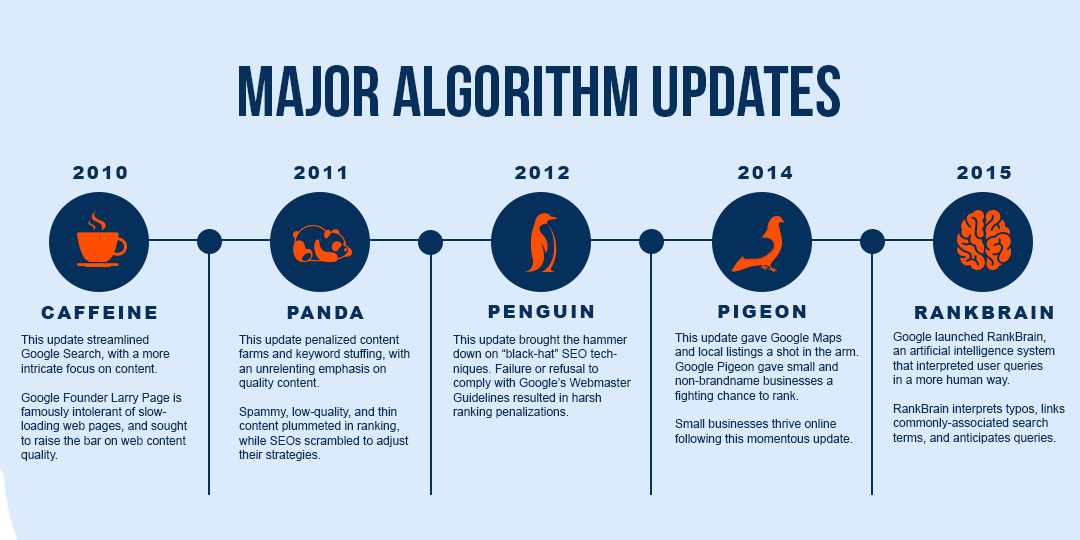

Some of Google’s algorithm updates were very public. Google Caffeine, launched in 2010, rebuilt Google’s indexing system to deliver fresher search results. 2011’s Google Panda shook the SEO world by heavily penalizing content farms and low-quality content. 2012’s Google Penguin update penalized websites that violated Google’s Webmaster Guidelines, and came down hard on “black-hat” SEO maneuvers such as manipulative link schemes. In 2014, Google Pigeon arrived to give local listings and Google Maps a shot in the arm, which was good news for small businesses seeking local search traffic. Google then launched RankBrain in 2015, a machine-learning system that interprets user queries in a more human way: it helps Google make sense of unfamiliar or ambiguous searches by relating them to words and concepts it already understands.

SEOs are always predicting the next major algorithm change, and Google is fond of dropping hints here and there.

The Big Takeaways:

Understanding the Google ranking algorithm in its entirety is nearly impossible, since Google is very secretive about the hundreds of intertwined algorithms working in tandem, and most of us don’t have advanced degrees in computer science and mathematics.

But rest assured! Though Google’s ranking algorithm has changed thousands of times and literally changes every day, it has been (mostly) consistent about which web content it penalizes and which it promotes.

What the Google Ranking Algorithm Hates:


- Spam/Surplus of ads

- Low-quality or “thin” content

- Slow sites

- Bad site structure

- Inconsistency

- Violations of Google’s Webmaster Guidelines

- Hidden text

- Duplicate content

- Illegitimate businesses/scams

What the Google Ranking Algorithm Loves:

- Fast websites

- Reviews/Interaction

- Consistency

- Authority

- Links to and from reputable sites

- Masterful site structure/quality landing pages

- Brand reinforcement

- Results that get results (task completion)

- Long-form content

- Relevant metadata

- Unique content